In class, we discussed several uses for game theory, particularly with respect to congestion games — one of which was a basic example involving minimizing travel times given a certain set of paths we may take.

In the real world, this might involve many more parameters, particularly where we can no longer generalize a route, but rather must include nodes along a road to better represent congestion and so forth.

This piqued my curiosity: where else can we make use of congestion games, and game theory more broadly? One answer turns out to be a novel approach to maximizing throughput over the wireless networks that IoT (Internet-of-Things) devices rely on, and the details are fascinating.

One of the challenges with IoT devices is their need for reliable, low-bandwidth communication: they send off sensor data, receive small control signals, and run other applications that need relatively slow but dependable wireless links.

As a result, many Internet-of-Things devices communicate using the IEEE 802.15.4 standard; technologies you may have heard of, like Zigbee and, more recently, Thread, are built on it. Its defining features are a low data rate (up to 250 kilobits, or 31.25 kilobytes, per second) and a short range (about 10 metres in the worst case).

Because of its limited range, this protocol allows for multi-hop communication across devices. The authors identify a problem: what if a node (an IoT device) is happy to send its own data through its neighbours, but refuses to forward packets for other nodes? The crux is that we wish to “incentivize” these rogue nodes to help by punishing them for not cooperating with their neighbours, since such nodes cause increased energy usage, worse network throughput and other undesirable outcomes. (Nobahary, Garakani, Khademzadeh, et al., Introduction)

What the authors propose

In short, the authors propose a game-theoretic approach in which nodes identify each other using “introduction” packets that let neighbours know of their existence, and vice versa. Then, in an infinitely repeated, multiperson game, the utility is (in summary) a function of “how much help” a neighbour has been to the current node and “how much help we have given” to that neighbour.

During the game, nodes send data through their neighbours until packets reach the destination (i.e., the base station/router). If a neighbour cooperates with a node (i.e., forwards to/from the current node), then its reputation increases, and we will be further inclined to help when the neighbouring node needs it. If, however, the cooperation is limited (slow transmission, for instance), or the neighbouring node refuses to cooperate by not forwarding packets, then we become less inclined to help it.

When we speak about “reputation,” we are referring to the amount of help we have received from a node versus the amount of help we have given it. When a node is detected as uncooperative, its neighbours reduce that node’s priority while prioritizing cooperative nodes, which produces a set of nodes that all have a vested interest in helping each other. This is shown to have a net positive effect on network throughput. (Nobahary, Garakani, Khademzadeh, et al., Proposed method)
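To make the reputation idea concrete, here is a minimal Python sketch of how a node might keep a per-neighbour tally and decide whether to forward. It is not the authors’ utility function: the `Ledger` and `Node` classes, the smoothed ratio used as “reputation,” and the `threshold` value are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Ledger:
    """Tally of help exchanged with one neighbour (hypothetical structure)."""
    received: int = 0  # packets this neighbour forwarded for us
    given: int = 0     # packets we forwarded for this neighbour

    def reputation(self) -> float:
        # Help received versus help given, smoothed by +1 so that an
        # unseen neighbour starts at 1.0 (an assumption, not from the paper).
        return (self.received + 1) / (self.given + 1)


@dataclass
class Node:
    name: str
    threshold: float = 0.5  # illustrative cut-off for cooperating
    ledgers: Dict[str, Ledger] = field(default_factory=dict)

    def _ledger(self, neighbour: str) -> Ledger:
        return self.ledgers.setdefault(neighbour, Ledger())

    def record_help_received(self, neighbour: str) -> None:
        """Call when `neighbour` forwards one of our packets."""
        self._ledger(neighbour).received += 1

    def handle_forward_request(self, neighbour: str) -> bool:
        """Forward for `neighbour` only while its reputation is high enough."""
        ledger = self._ledger(neighbour)
        if ledger.reputation() < self.threshold:
            return False  # deprioritize the uncooperative neighbour
        ledger.given += 1
        return True

    def ranked_neighbours(self) -> List[str]:
        """Neighbours ordered from most to least reputable."""
        return sorted(self.ledgers,
                      key=lambda n: self.ledgers[n].reputation(),
                      reverse=True)


node = Node("a")
for _ in range(3):
    node.record_help_received("b")   # b cooperates with us
print(node.handle_forward_request("b"))                       # we help b in return
print([node.handle_forward_request("c") for _ in range(3)])   # c never helps us
print(node.ranked_neighbours())
```

In this toy version a free-riding neighbour still gets a little goodwill at first, then is refused once the help we have given outweighs what we received; a real scheme would also need reputation to recover when a node starts cooperating again.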

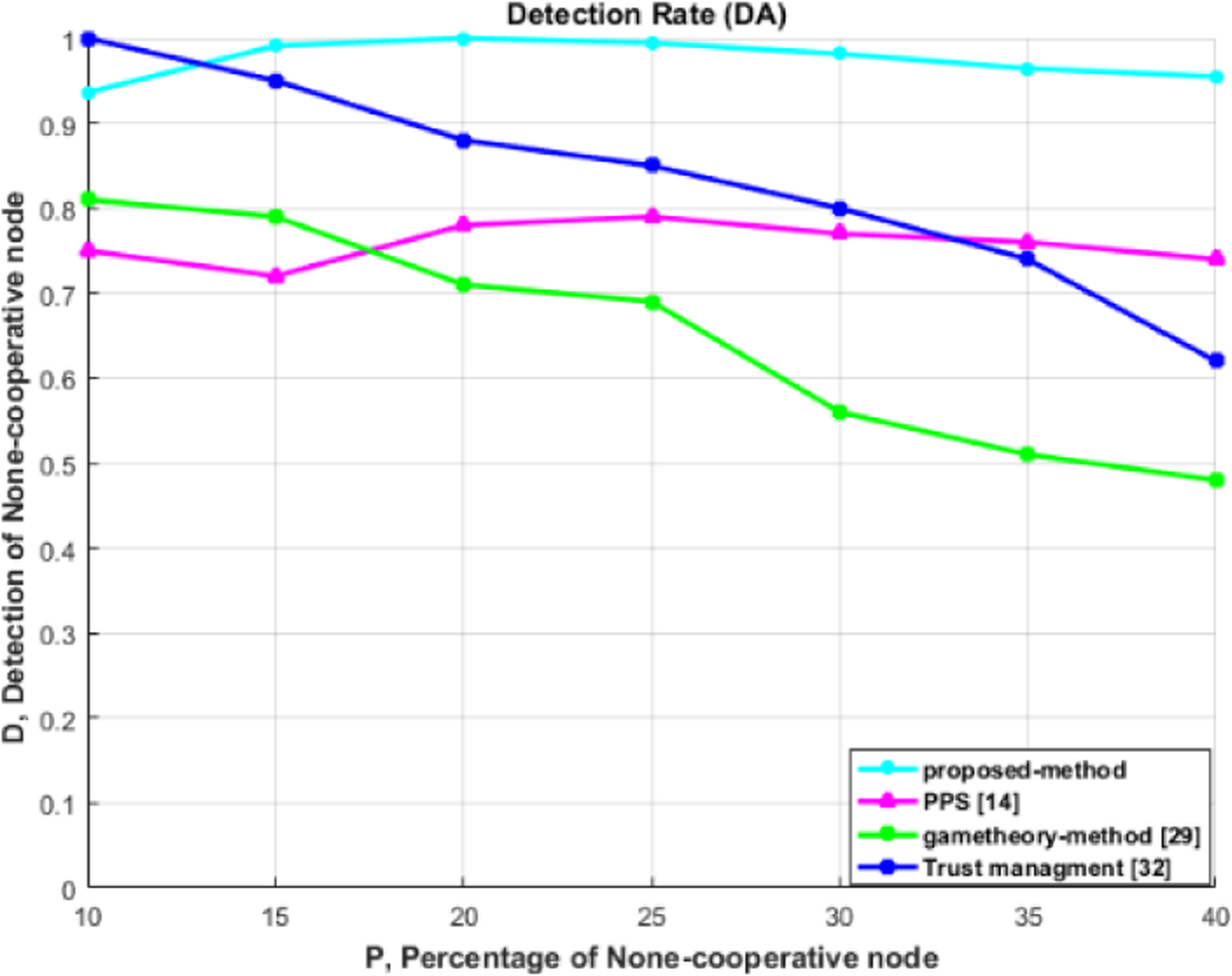

A notable result is that the modified game-theory approach (the proposed method) is significantly more effective than a game-theory approach described in a 2013 paper. The difference appears to be that the earlier paper describes a game in which nodes individually determine their probability of packet forwarding rather than communicating this information throughout the network. (Sun, Guo, Ge, Lu, Zhou and Dutkiewicz, §4)

Commentary

One benefit of prioritizing nodes (IoT devices) that are willing to cooperate is a network with generally higher throughput. However, there is not necessarily an easy way to tell when a node is acting “selfishly” and when it simply cannot provide assistance due to, for instance, its positioning. As a result, some nodes end up deprioritized for their lack of “reputation,” even when that is due to the circumstances they are placed in. A potential option would be to have multiple base stations, each of which communicates whether or not a certain node is within range. If a node is not within range of any base station, then its energy usage is discounted, and this is communicated to the edge nodes.
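As a rough illustration of the multi-base-station idea, the sketch below scales down the penalty applied to a node when no base station reports it as reachable. This is one possible reading of “discounting,” not something taken from the paper; the function name `adjusted_penalty`, the report format, and the default `discount` of 0.5 are all hypothetical.

```python
from typing import Dict


def adjusted_penalty(raw_penalty: float,
                     in_range_reports: Dict[str, bool],
                     discount: float = 0.5) -> float:
    """Return the penalty to apply to a node's reputation.

    `in_range_reports` maps a base-station name to whether that station
    can reach the node. If no station can (or there are no reports at all),
    the node is assumed to be badly placed rather than selfish, and its
    penalty is multiplied by `discount`.
    """
    if not 0.0 <= discount <= 1.0:
        raise ValueError("discount must be between 0 and 1")
    reachable = any(in_range_reports.values())
    return raw_penalty if reachable else raw_penalty * discount


# A node that two base stations both fail to reach is penalized less.
print(adjusted_penalty(10.0, {"bs1": False, "bs2": False}))
# A node within range of at least one station gets the full penalty.
print(adjusted_penalty(10.0, {"bs1": True, "bs2": False}))
```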

Applying game theory to IoT device communication is not a new discovery; there have been many earlier attempts (not to trivialize the authors’ findings!) to use it to optimize wireless networks for such devices. The novelty of this particular article is its approach to punishing uncooperative players by factoring neighbours’ past performance into the utility function. Yet there is still a Nash equilibrium present, because the authors make use of an infinitely repeated game, and the equilibrium is established only at each step.

Of interest is its applicability to large areas, particularly urban environments, where we might want to, for instance, measure how people cluster in order to decide where to place a transit stop or other facilities, along with other as-yet-undiscovered uses for network science. In that regard, I hope to see more innovation in this space.

References

- Nobahary, S., Garakani, H.G., Khademzadeh, A. et al. Selfish node detection based on hierarchical game theory in IoT. J Wireless Com Network 2019, 255 (2019). https://doi.org/10.1186/s13638-019-1564-4

- Y. Sun, Y. Guo, Y. Ge, S. Lu, J. Zhou and E. Dutkiewicz, “Improving the Transmission Efficiency by Considering Non-Cooperation in Ad Hoc Networks,” in The Computer Journal, vol. 56, no. 8, pp. 1034-1042, Aug. 2013, doi: 10.1093/comjnl/bxt042.